Tech giant Samsung expects a steep drop in its second-quarter profit, news that also weighed on shares of other chipmakers. Wimbledon is trying to make its AI highlights system fairer, France is moving ahead with a law to curb online hate speech that could mean fines for Facebook, and Aleksandr Kogan is dropping his lawsuit against Facebook.

Samsung Electronics Co. said Friday its operating profit for the last quarter likely fell more than 56% from a year earlier amid a weak market for memory chips.

The South Korean tech giant estimated an operating profit of 6.5 trillion won ($5.5 billion) for the April-June quarter, which would represent a 56.3% drop from the same period last year.

The company said its revenue likely fell 4% to 56 trillion won ($48 billion), but did not provide a detailed account of its performance by business division. It will release a finalized earnings report later this month.

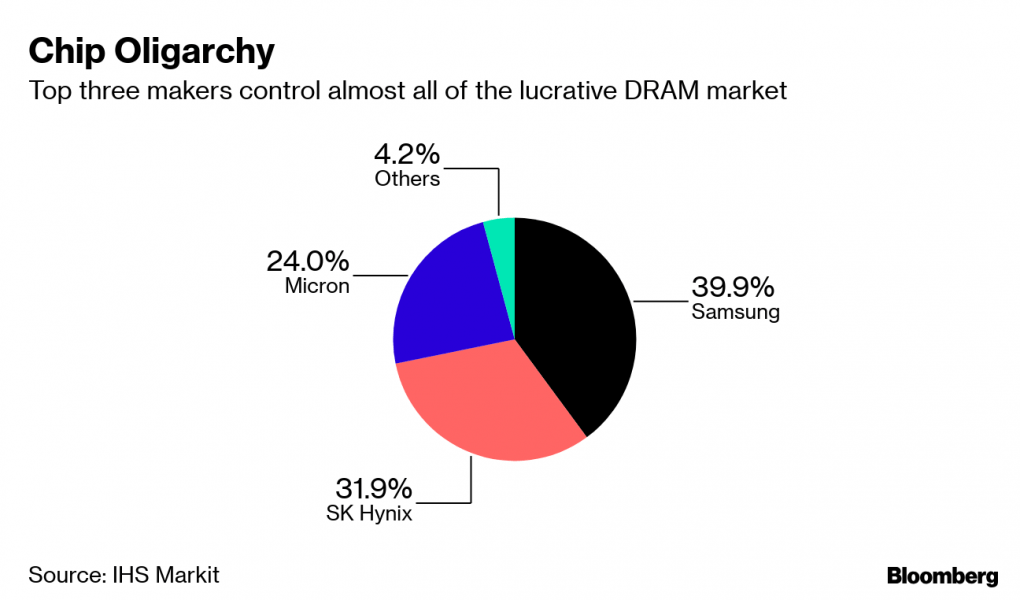

Analysts say falling prices of DRAM and NAND memory chips are eating into the earnings of the company, which saw its operating profit during the first quarter drop more than 60% from last year. U.S. sanctions on Chinese technology giant Huawei may have also contributed to Samsung’s profit woes by reducing its chip shipments and pushing chip prices down further.

Samsung, which is the world’s biggest maker of semiconductors and smartphones and a major producer of display screens, is also bracing for the impact of tightened Japanese controls on exports of high-tech materials used in semiconductors and displays.

The Seoul government sees the Japanese measures, which went into effect on Thursday, as retaliation against South Korean court rulings that called for Japanese companies to compensate aging South Korean plaintiffs for forced labor during World War II. Seoul plans to file a complaint with the World Trade Organization.

Shares of other semiconductor makers also fell Friday after Samsung’s announcement.

Wimbledon Attempts to Make AI Fairer

Efforts to make artificial intelligence fairer now extend to Wimbledon’s courts.

The All England Club, which hosts the famed British tennis event, is adding technology enhancements at this year’s tournament aimed at eliminating bias from computer-generated video highlights.

The club has already been using AI to go through hours of footage and automatically pick out the best shots from matches played on its 18 courts. The AI chooses the moments based on criteria including whether a player does a fist pump and how much the audience cheers after a point. Fans can then watch the assembled videos online and players can use them to review their performance.

For this year’s tournament, which runs until July 19, the AI has been tweaked to balance out any favoritism shown to a player who gestures more or has a louder fan base. The goal is to make sure an equally skilled yet more reserved opponent gets similar exposure.

“Just because you don’t pump your fist doesn’t mean it’s not a good shot,” said Sam Seddon, a client executive at IBM, the club’s technology provider.

Tech bias has come into focus lately amid growing awareness that artificial intelligence systems aren’t neutral tools but reflect society because they’re programmed by humans. Other, more serious examples include facial recognition that misidentifies darker-skinned people and financial algorithms that charge higher interest rates to Latino and black borrowers. Some U.S. lawmakers are calling for tighter scrutiny that includes subjecting big companies to an “algorithmic accountability” test of their high-risk AI systems.

Seddon said IBM’s Watson artificial intelligence system scans video footage and scores it on crowd cheering, player gestures and how important each point is, producing an “excitement ranking” that is used to find the best clips.

Those scores are now adjusted to smooth out any bias because otherwise “you could potentially have a situation whereby not all of the best moments of a match are identified,” Seddon said.

France Moves to Curb Online Hate Speech

French lawmakers have approved a measure intended to force search engines and social networks to take hate speech off the internet. Platforms that fail to comply, including social media giant Facebook, could be fined.

The measure adopted Thursday by the lower house of the French parliament would require social networks to remove hate speech within 24 hours of a confirmed violation. Search engines would have to stop referencing the content as well.

The provision, part of a bill on internet regulation, targets videos or messages inciting or glorifying terrorism, hate, violence, or racist or religious abuse. Violators could face hefty fines.

It prompted heated discussion in the National Assembly over how to define hate speech.

French President Emmanuel Macron proposed such a law earlier this year amid an uptick in anti-Semitic incidents in France and concerns about increasing extremist language online.

Aleksandr Kogan Drops Facebook Lawsuit

Aleksandr Kogan, the data scientist at the center of Facebook’s Cambridge Analytica privacy scandal, said he is dropping a defamation lawsuit against the social network rather than engage in an expensive, drawn-out legal battle.

Kogan, 33, sued the social giant in March, claiming it scapegoated him to deflect attention from its own misdeeds, thwarting his academic career in the process. The suit sought unspecified monetary damages and a retraction and correction of what Kogan said were “false and defamatory statements.”

“We thought there was a one percent chance they would do the right thing,” Kogan told media outlets. Facebook is “brilliant and ruthless,” he added. “And if you get in their way they will destroy you.”

A Facebook spokesperson said the company had “no comment to share concerning this development.”

The former Cambridge University psychology professor created an online personality test app in 2014 that vacuumed up the personal data of as many as 87 million Facebook users. The vast majority of those were unwitting online friends of the roughly 200,000 people Kogan says were paid about $4 to participate in his “ThisIsYourDigitalLife” quiz.

Cambridge Analytica, a political data-mining firm founded by conservative power brokers including billionaire Robert Mercer and former White House aide Steve Bannon, paid Kogan $800,000 to conduct his research and to provide the firm with a copy of the data. The project’s aim was to create voter profiles based on Facebook users’ online behavior to help in tailored political-ad targeting, according to Christopher Wylie, a former data scientist at the firm.

In March 2018, when the scandal broke, Facebook executives charged that Kogan had lied to them about how the data he harvested would be used. Facebook deputy general counsel Paul Grewal claimed at the time in a statement to The New York Times that Kogan perpetrated “a scam — and a fraud.” CEO Mark Zuckerberg accused Kogan of violating Facebook rules “to gather a bunch of information, sell it or share it in some sketchy way.”

Kogan said such accusations were “either unfair or untrue.” Facebook shut down Kogan’s app in late 2015 after it was exposed in press accounts and he said he then destroyed his copy of the rogue data at its request. But it didn’t ban him from the social media platform until the Cambridge Analytica scandal broke last year.

Evidence presented to a U.K. parliamentary committee indicated that Cambridge Analytica had not deleted the Kogan-acquired dataset on 30 million Facebook users by February 2016. Britain’s Information Commissioner’s Office said Cambridge Analytica used some of that data “to target voters during the 2016 U.S. presidential campaign process.” Data collected included age, gender, posts, email addresses and pages users “liked,” depending on their privacy settings, the regulator said.

Cambridge Analytica worked for the eventual 2016 GOP presidential nominee, Donald Trump. Had Trump not won the election, “my life (would be) very different,” Kogan said.

Kogan and other developers say Facebook allowed such wholesale gathering of friend data at the time, although access was later throttled back for all but select partners.

“They created these great tools for developers to collect the data and made it very easy. This is not a hack. This was ‘Here’s the door. It’s open. We’re giving away the groceries. Please collect them,’” Kogan told CBS News’ 60 Minutes last year.

Other developers tell similar tales of Facebook’s lax attitude toward user data and their own naïve complicity. If true, Facebook would have been in direct violation of a 2011 consent order with the Federal Trade Commission for allowing third-party apps like Kogan’s to collect data on users without their knowledge or consent.

Kogan’s university appointment ended in September, his company has gone bust and he has been doing freelance programming, he said. “I think it would be damn near impossible to get an academic job,” Kogan said by phone from Buffalo, New York, where he currently lives with his wife.

Facebook’s privacy transgressions are also the subject of investigations in Europe and by a number of U.S. state attorneys general. Canada has sued the company over its alleged failure to protect user data, as has the attorney general of the District of Columbia. In addition, a federal judge in northern California last month allowed a class action lawsuit over Facebook’s privacy transgressions to move forward.

Kogan told media outlets he now regrets invading so many people’s privacy. “In hindsight, it was clearly a really bad idea to do that project.”