Read With Me If You Want To Live…Killer Robot Weapons Debate Late

Should We Be Using Robots as Weapons?

Is it ethical to use robots, otherwise known as Lethal Autonomous Weapons Systems (LAWS), as weapons of war? Should we use robots at all to kill human enemies or wanted targets? Robots may be accurate, powerful, expendable and intelligent, but they lack compassion and wisdom. They act on the parameters that make up their artificial intelligence, but what if those parameters fall short of the complexity of the human brain; for example, the gut instinct to shoot or to spare a child combatant or a mortally wounded target? It’s all been laid out in the world of science fiction. There are many things that could go wrong. Movies like I, Robot, Stealth, RoboCop, Avengers: Age of Ultron and especially the Terminator franchise show how overlooked parameters and hardware errors can turn robots endowed with artificial intelligence against their masters. But humans are smart enough to build fail-safes and kill switches (if they can be used in time). The real question is whether it’s moral or ethical to use LAWS during military operations.

In times of war, there are certain criteria that must be followed. Like the three laws in I, Robot, the criteria set out in the 1949 Geneva Conventions must be followed on the field of battle. Attacks must be carried out only out of necessity, warriors must be able to distinguish between combatants and non-combatants, and the value of the objective must outweigh the collateral damage. Seems easy enough, but the Martens Clause, which dates back to the 1899 Hague Convention and was restated in the 1977 Additional Protocol to the Geneva Conventions, also states that weapons used must satisfy the ‘principles of humanity and the dictates of public conscience.’ LAWS might be able to satisfy the first three but the fourth is highly subjective. Within those principles lie compassion and political correctness, something current AI technology will be hard-pressed to emulate. Even setting that aside, many people are reluctant to let machines decide who gets to live or die, even in times of war.

The Law of Conservation of Man

In movies that tackle robotic soldiers, most recently Iron Man 2 and even Iron Man 3, robots can be deployed in battle instead of humans ‘in order to save lives’. It’s the same argument that was made for dropping the atomic bombs on Japan: instead of prolonging the war and killing millions more through conventional warfare, give the enemy a good scare. It worked before and it could work again, only this time with robots instead of nuclear weapons. Iron Man 2 is a good example. It featured drones with limited AI replacing actual soldiers in all branches of the military. Give them a target and they can attack, pursue and work around obstacles and crowds. So instead of enlisting American citizens for military duty, the US could just manufacture its soldiers and simply upload their training. Apart from saving lives, the US could save money in the long run on food, medicine, training and veterans’ paychecks. Financial efficiency is still the bottom line, but ‘saving the lives of enlisted personnel’ wins over the citizenry every time.

This is now partially true of drone-using countries. Drones are basically flying robots used for patrol or search-and-destroy missions. These drones have limited AI for navigation, flight correction and targeting, but they still need to be activated, flown and guided by an operator. If the drone hits a civilian-populated area in search of its target, the operator is liable to sanctions and the erring country will later have to answer for the loss of innocent lives. But if the drone is successful, that single unit has spared the squad or platoon of soldiers that a conventional operation would have put at risk. In Iron Man 3, one could say that if ordinary soldiers had been sent against the Extremis-powered enemies to save the president, they could have been wiped out. Thank JARVIS.

The Erring Human Element

Which brings us to why LAWS are in the works. Human operators can make mistakes. Human operators can disobey or hesitate and jeopardize an operation. A wrong press of a button can send a drone crashing or shooting at the wrong targets. But LAWS with a good AI will not disobey orders, will not hesitate and theoretically cannot make mistakes. They will perform as ordered and will not stop until the mission is complete or cancelled, or the unit is destroyed; and the probability is high that they won’t miss.

The Wisdom of Building First and Building Better

In the movie The Core, Project DESTINI, a machine able to induce targeted earthquakes, accidentally stopped the earth’s core from rotating. Why was such a machine built? The primary argument was that other countries might build a similar device. It’s the same concept with LAWS. Though morally questionable, research and development continues because if we thought of building it, other countries are thinking about it as well. It’s more prudent to be the first, and it has to be better than the rest.

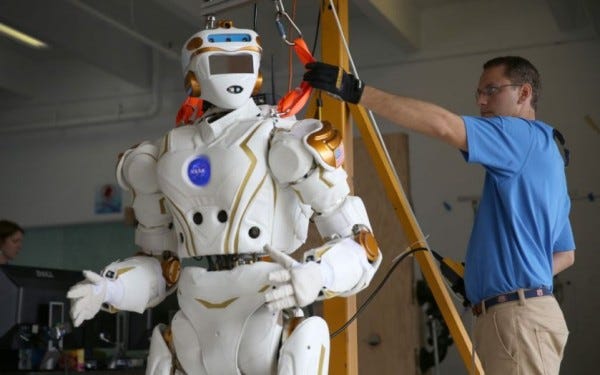

Advanced robotics technology is already available. Bipedal robots such as Honda’s ASIMO already exist. There are already kits for building miniature humanoid robots that can dance, ride bicycles and take part in robot wrestling battles. Drone technology is now widely available and cheap. With proper AI and weapons, these kits can easily become tools of war.

That’s the reason why the US and Great Britain are pursuing the development of LAWS. With the technology we have today, states like Iran, North Korea, China and Russia may already have their prototypes. It’s a matter of national security that the US should be first and better.

The US Defense Advanced Research Projects Agency (DARPA) already has programs similar to LAWS: Fast Lightweight Autonomy (FLA) and Collaborative Operations in Denied Environment (CODE). Both projects involve aerial drones that are able to move into enemy territory and perform the necessary steps of a strike mission. These will be able to act independently in case signals get jammed.

Is Artificial Intelligence Intelligent Enough?

There are no details on how well CODE and FLA are able to perform strike missions. Weapons are no problem, but is their AI sufficient? In Iron Man 2, one of the drones chasing Iron Man showed that the AI had trouble differentiating a child in costume from the real thing. In Iron Man 3, even JARVIS couldn’t differentiate between Pepper Potts and the other Extremis-powered enemies. That’s because the AIs were given very specific instructions. How far have we gone in the study of artificial intelligence? Can we give LAWS enough smarts to follow the Geneva Conventions during battle, so that if an opponent throws away his or her weapons, the opponent is spared and an arrest protocol kicks in?

And what if we go too far in the study of artificial intelligence and end up with something like HAL, VIKI, Virus, Ultron or Skynet? The way things are right now, VIKI, Virus and Skynet have a point.

Overkill

Speaking of going too far, if those drones in Iron Man 2 were deployed in Afghanistan against guerrillas who don’t have full battle gear, the enemy would be so outmatched as to be practically defenseless. Isn’t killing a defenseless opponent tantamount to murder? To get more real, if an autonomous flying drone were to attack the same target using machine guns meant for other aircraft and able to hit a target a thousand feet away, wouldn’t that practically be murder? Using drones against terror targets in populated areas is already considered overkill.

Laws on LAWS

So if DARPA’s CODE is able to perform unguided strike missions, is killing included? If so, giving machines the power to decide who lives and who dies seems irresponsible. When guided, the robot acts as the weapon and the operating soldier decides who to kill and when. When the robot acts independently, a line of code determines life and death; it’s a very cheap way for the opponent to die. Like it or not, LAWS are already being researched and built. New provisions should be added to the articles of war immediately to cover the use of Lethal Autonomous Weapons Systems. Germany and Japan have already expressed their distaste for removing humans from the equation, arguing that sending overpowered LAWS against ordinary opponents is murder. But like bomb disposal robots, LAWS can or should be used just to disable or incapacitate opponents so that a team of real soldiers can move in. Giving them too much or too little intelligence along with the ability to kill is just asking for trouble.

The post Read With Me If You Want To Live…Killer Robot Weapons Debate Late appeared first on Movie TV Tech Geeks News By: Marius Manuella